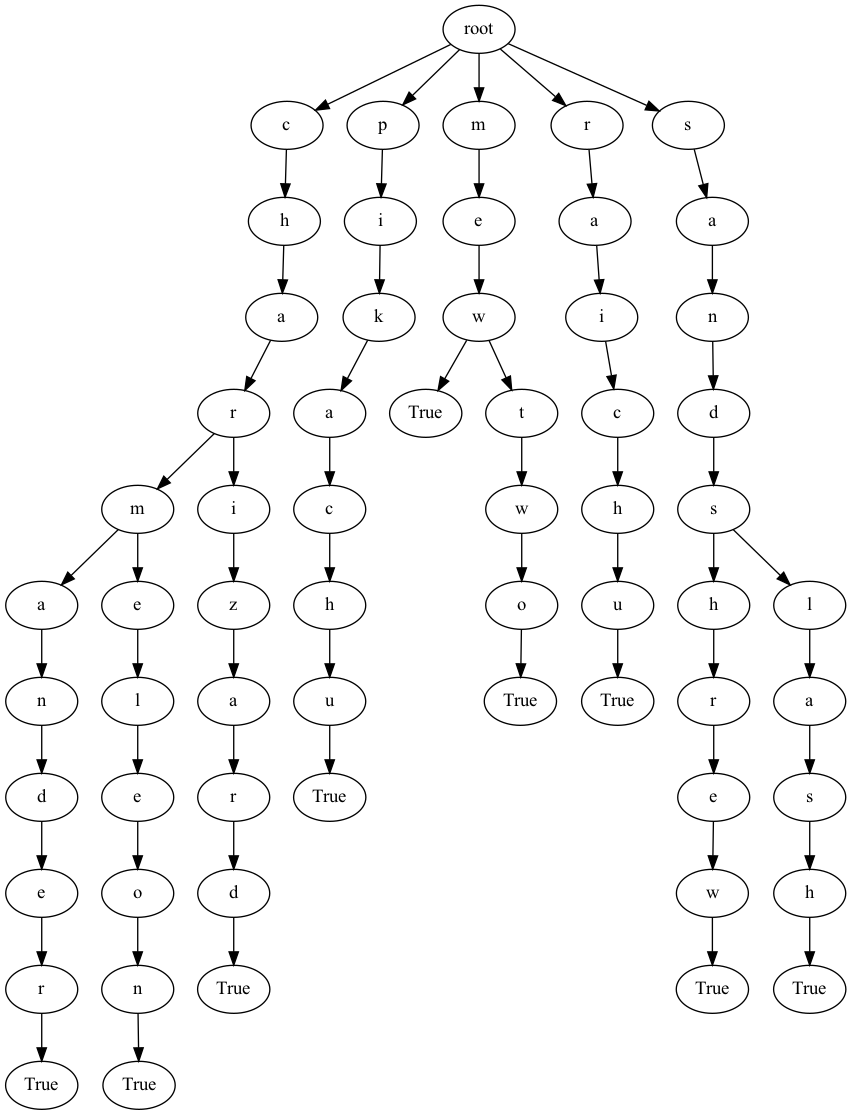

Exploring the Role of Activation Functions in Deep Learning

My senior capstone project at Fresno State investigated activation functions in deep neural networks. Activation functions are often overlooked when designing and modifying neural network models, so we wanted to better understand how they affect model performance. We started with image classification experiments, testing five activation functions (ReLU, Swish, Mish, TAct, and mTAct) across a variety of models while also tuning several hyperparameters (number of epochs, learning rate, etc.).
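For readers unfamiliar with these functions, here is a minimal sketch of three of them in plain Python, using their standard definitions: ReLU is max(0, x), Swish is x * sigmoid(x), and Mish is x * tanh(softplus(x)). This is only an illustration, not the project's code; the experiments used PyTorch, which provides these as `torch.nn.ReLU`, `torch.nn.SiLU` (Swish), and `torch.nn.Mish`. TAct and mTAct are left out because their definitions are not given here, and the helper names (`compare`, `ACTIVATIONS`) are made up for this example.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def softplus(x: float) -> float:
    """log(1 + exp(x)), written to avoid overflow for large x."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def relu(x: float) -> float:
    """ReLU: zero for negative inputs, identity otherwise."""
    return max(0.0, x)


def swish(x: float) -> float:
    """Swish (with beta = 1, also called SiLU): x * sigmoid(x)."""
    return x * sigmoid(x)


def mish(x: float) -> float:
    """Mish: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))


# Hypothetical registry so the same loop can evaluate every function.
ACTIVATIONS = {"relu": relu, "swish": swish, "mish": mish}


def compare(xs):
    """Return each activation's output on the same list of inputs."""
    return {name: [fn(x) for x in xs] for name, fn in ACTIVATIONS.items()}


if __name__ == "__main__":
    for name, ys in compare([-2.0, -0.5, 0.0, 0.5, 2.0]).items():
        print(name, [round(y, 4) for y in ys])
```

Unlike ReLU, Swish and Mish are smooth and let small negative values through, which is one reason they are often compared against ReLU.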

Unfortunately, we found no clear pattern linking any particular activation function to model accuracy. After I graduated, we decided to test these activation functions on other deep learning tasks. I currently have two extensions to my capstone project: one involving Natural Language Processing (NLP) and one involving Graph Neural Networks (GNNs). The NLP extension tests the activation functions on a sentiment analysis task with movie review data, while the GNN extension tests them on a molecular odor classification task in which the molecules are represented as graphs. We hope to find clear patterns between activation functions and model accuracy before we publish our results.

Stack: Python, PyTorch, CUDA, Bash

Image Source: https://analyticsindiamag.com/wp-content/uploads/2018/12/nural-network-banner.gif